This is it. Hooray! The final Cube as I always wanted it to be.

It just took me about 2 years of planning, blood, sweat & tears, huffing and puffing. Many tries to find the right parts, plenty materials evaluated always trying to keep the budget low.

Meet the ancestors

You might have followed the route I took for quite some time now:

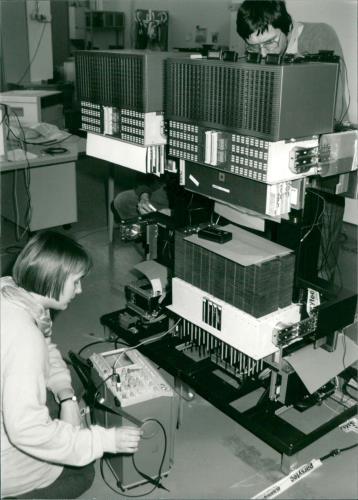

It began with the ‘Tower of Power‘, basically a component carrier with a power-supply.

After some years it was replaced by the first Cube. Well, yes, while it had a somewhat cubic’ish case, is still was just a dull standard industrial case. Not really what I imagined how my computer should look.

Form follows function

If you’ve read some posts here on GeekDot, you might already got the impression that I’m a sucker for design. Well, not that kind of a surprise, given I studied design some decades ago 🙄

I’m also heavily influenced by the works and philosophy of Dieter Rams (mainly for BRAUN) and Hartmut Esslinger (of frog design), of which you might not have heard about, but you know their designs for sure…

So I fell in love with the Parsytec x’plorer and other iconic computer designs like these ‘cubistic’ examples:

Yes, I am a strong believer that a computer, while basically being a rather boring calculation tool, should look good, timeless and might give you an idea of its innards are actually doing something.

We could probably go on forever, defining how a well designed computer should look like. But like the Romans used to say: “non potest argui per gustum” (You can’t argue about taste)…

Let’s say, I’m probably not totally off, given that most designs I like are also on display at the Museum Of Modern Art 😉

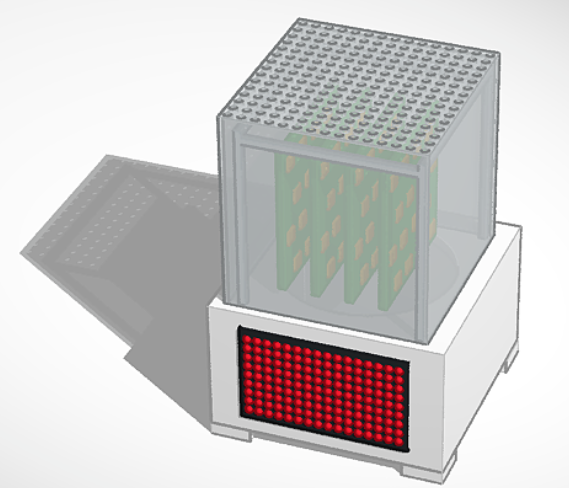

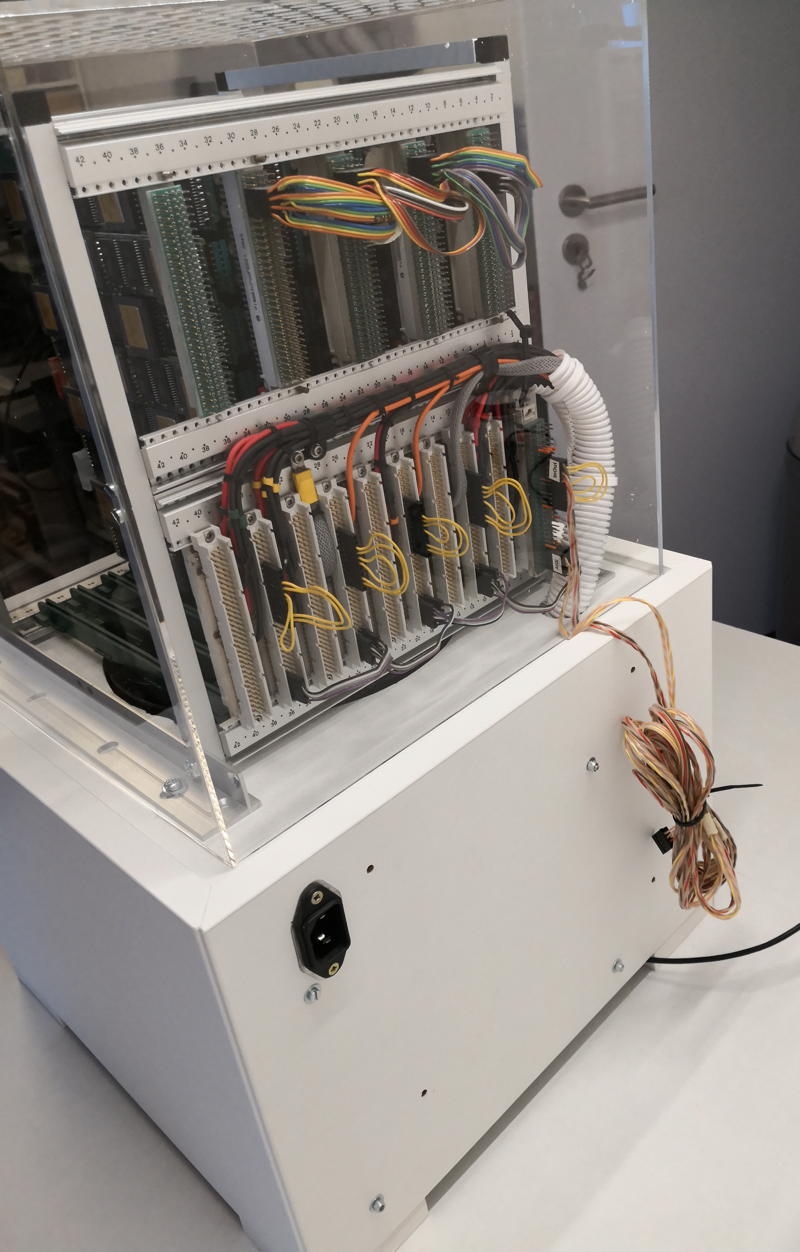

So mentioning the Final Cubes design, it’s case we’re talking about: If you’re really picky, then yes, the Final Cube is actually two cubes:

The carrier-cage on the top which I tried to keep simplistic and invisible to give the PCBs as much stage as possible. The user should be able to see the many, shining CPUs. So 10x10mm aluminum square tubes are connected by 3D-printed frame-corners to provide maximum view onto the technology.

For protection but also as a design statement and tribute to Rams’/Gugelots famous ‘snow white’s coffin‘ everything is surrounded by a 30x30x30cm translucent acrylic cube.

The white base actually isn’t cubical at all being much wider than tall. Nevertheless, its design should be even more simplistic and cautious to serve three purposes:

- Give the computing-parts above it a proper podium

- House the LED array which provides the fitting aura

- …and finally house all the tech the user should not care about

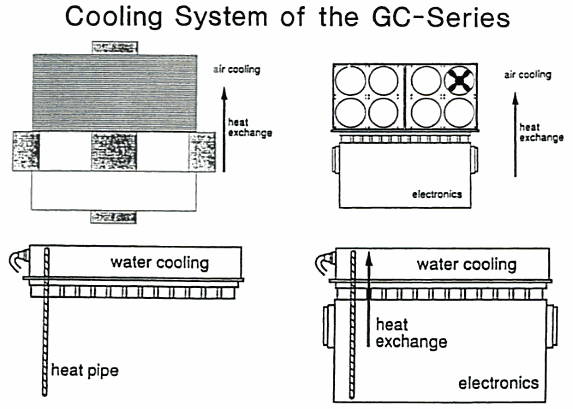

With quite a big fan between the base and the top both work like a chimney (following the convection) sucking the air from the bottom and blowing it through 169 holes in the top plate of the cube.

Here’s an idea out how it looks “working”:

When one thing comes to another

The parts of which the Final Cube is build from aren’t all created this year. Actually only the case and the cage-frame are from 2019 – all other parts were designed by me some years before.

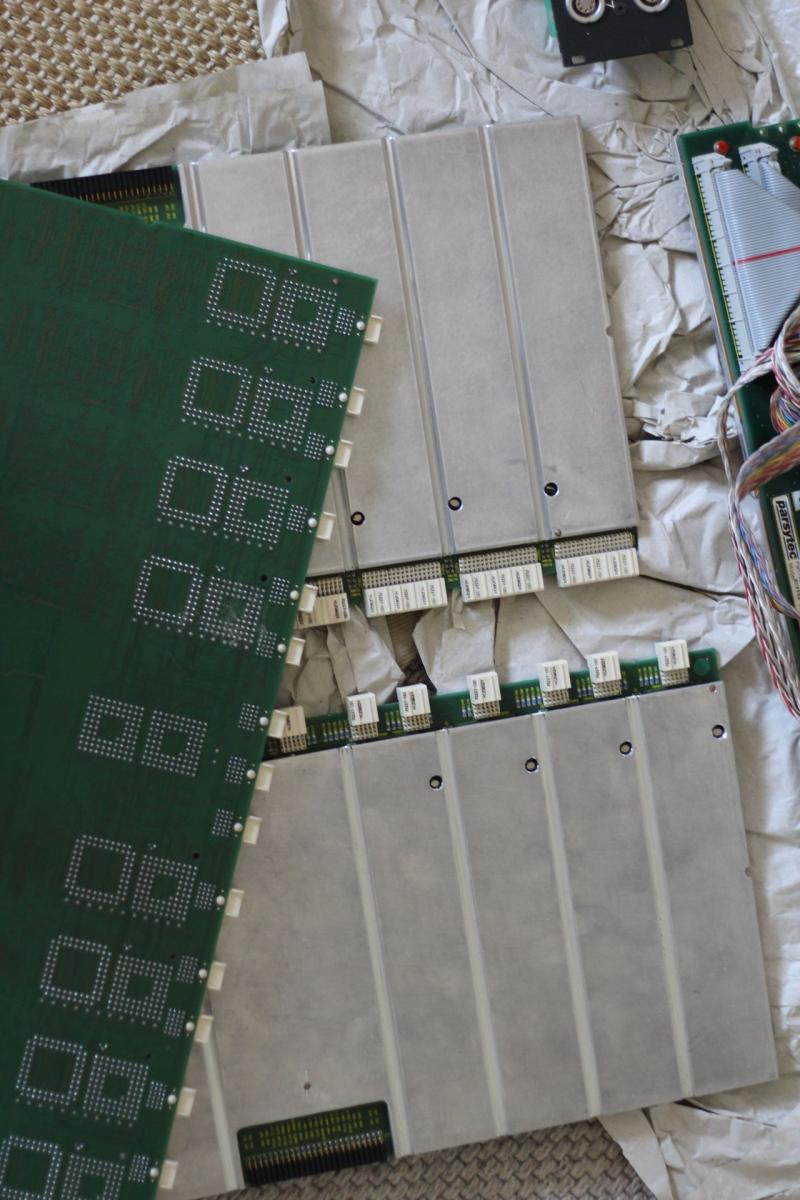

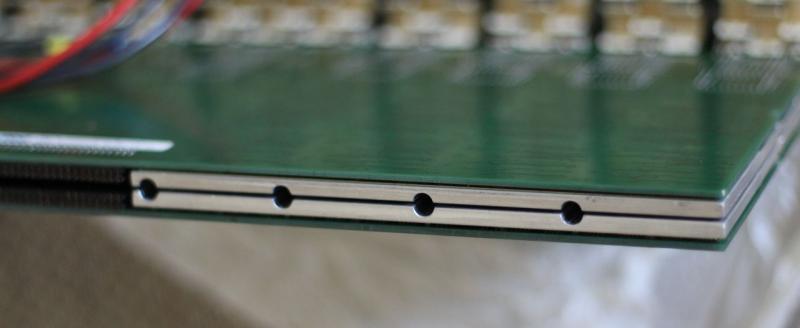

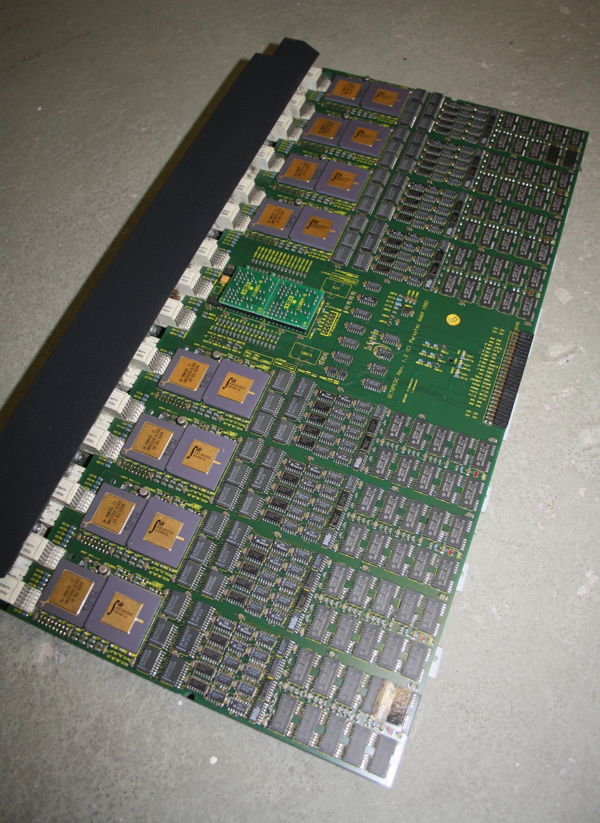

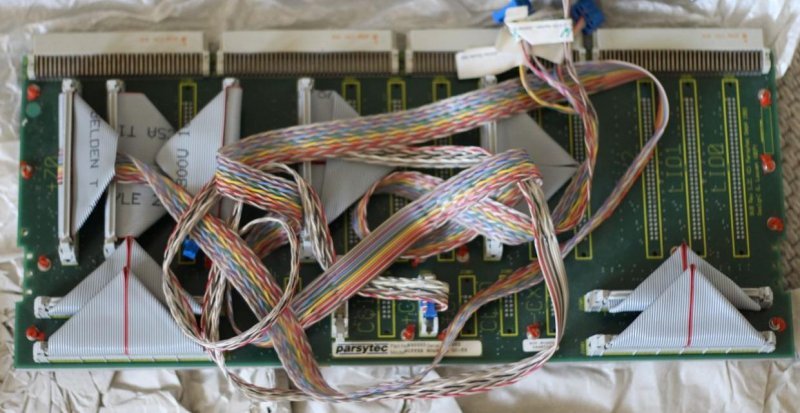

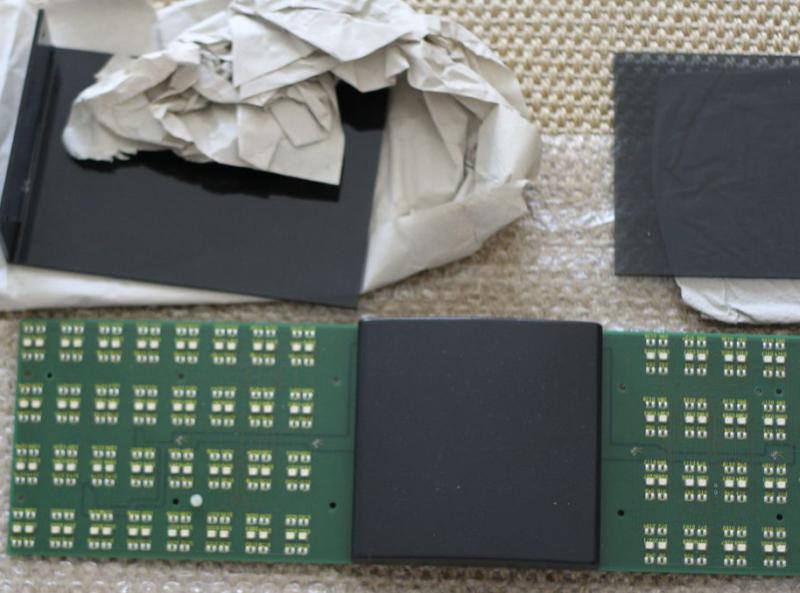

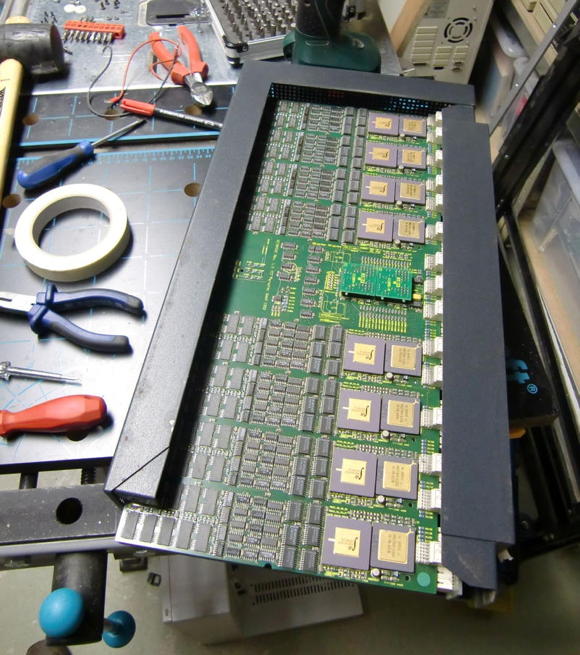

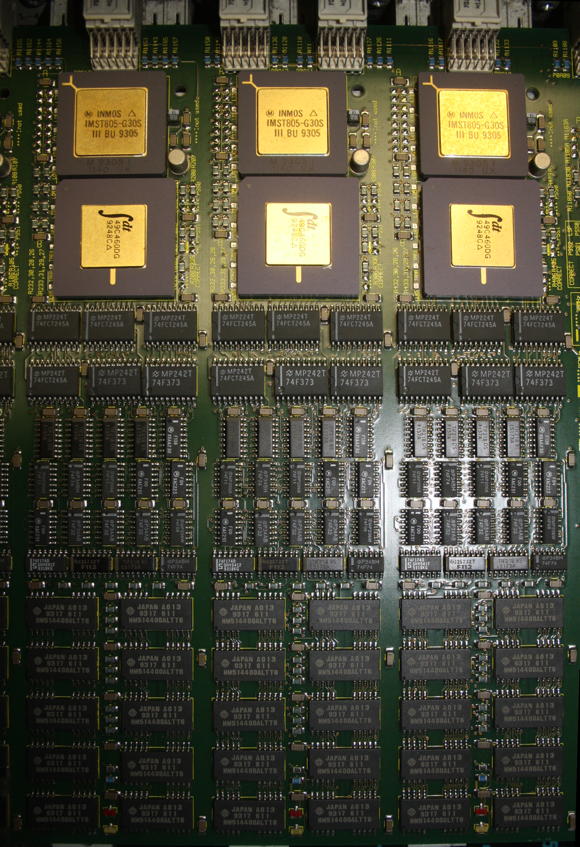

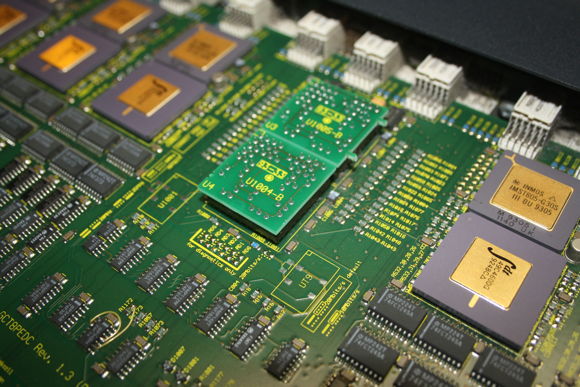

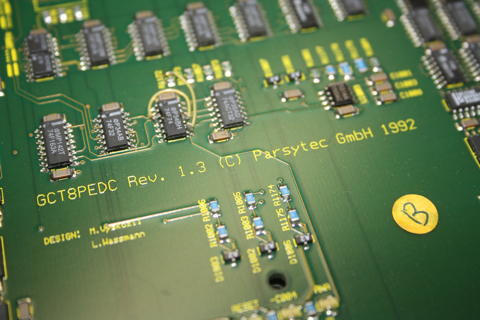

The core of everything are TRAMs – these are Transputer computing modules defined by Inmos back in 1990. The specific TRAMs used are my own AM-B404, each containing a 25MHz T800 and 2MB of fast SRAM.

16 of these TRAMs are placed onto an Inmos B012 (or compatible) carrier board. And up to 10 of these carriers can be put into the Cubes carrier-frame creating the cluster.

Under the hood

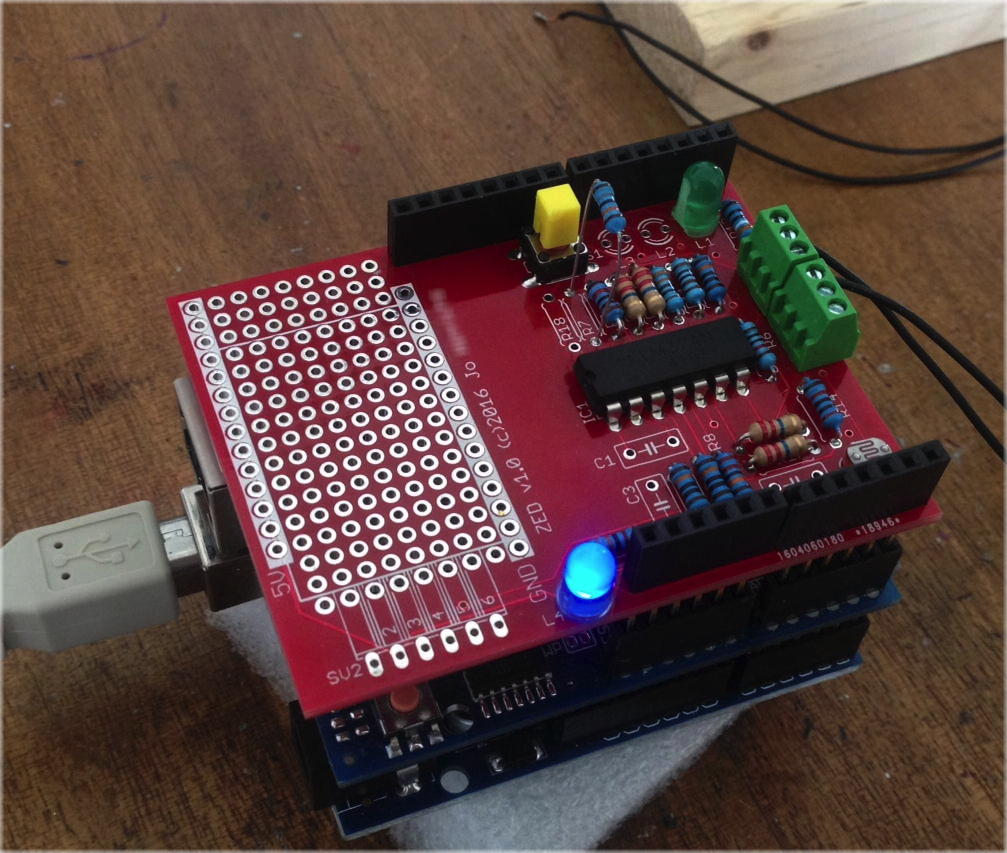

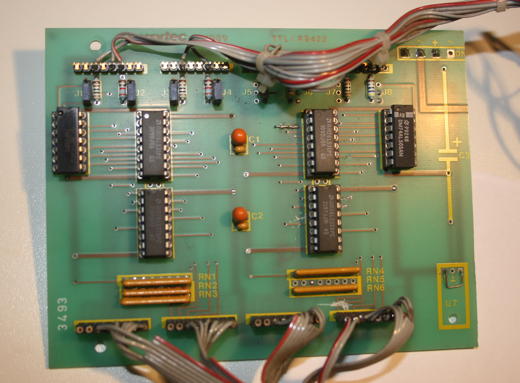

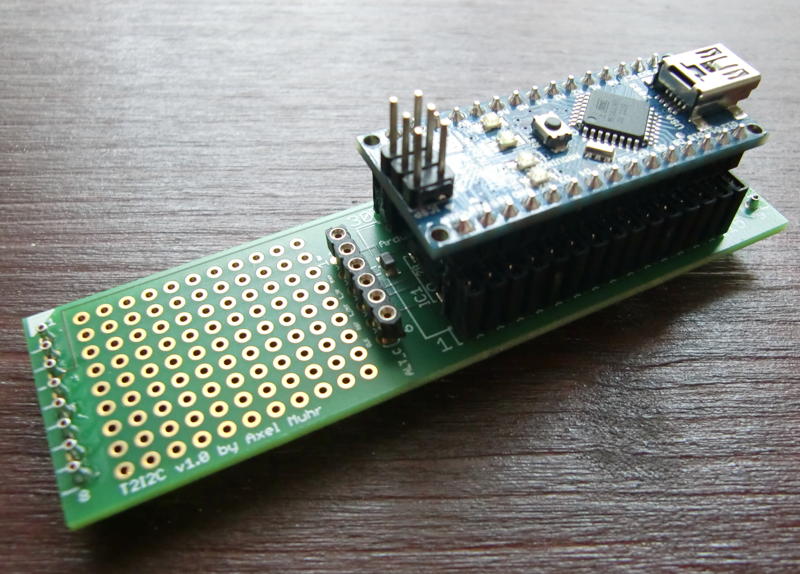

Below the carrier-frame, in the base, you can spot a 32×16 LED panel. This one is actually from 2012 when I designed the T2i2c, an i2c-bus to Transputer TRAM.

So it was a natural move to make the T2i2c into a system-controller. It does not only controls the LEDs displaying the current load of all Transputers, but also using a photo-diode to set the display brightness as well as measuring the internal temperature and overall power consumption.

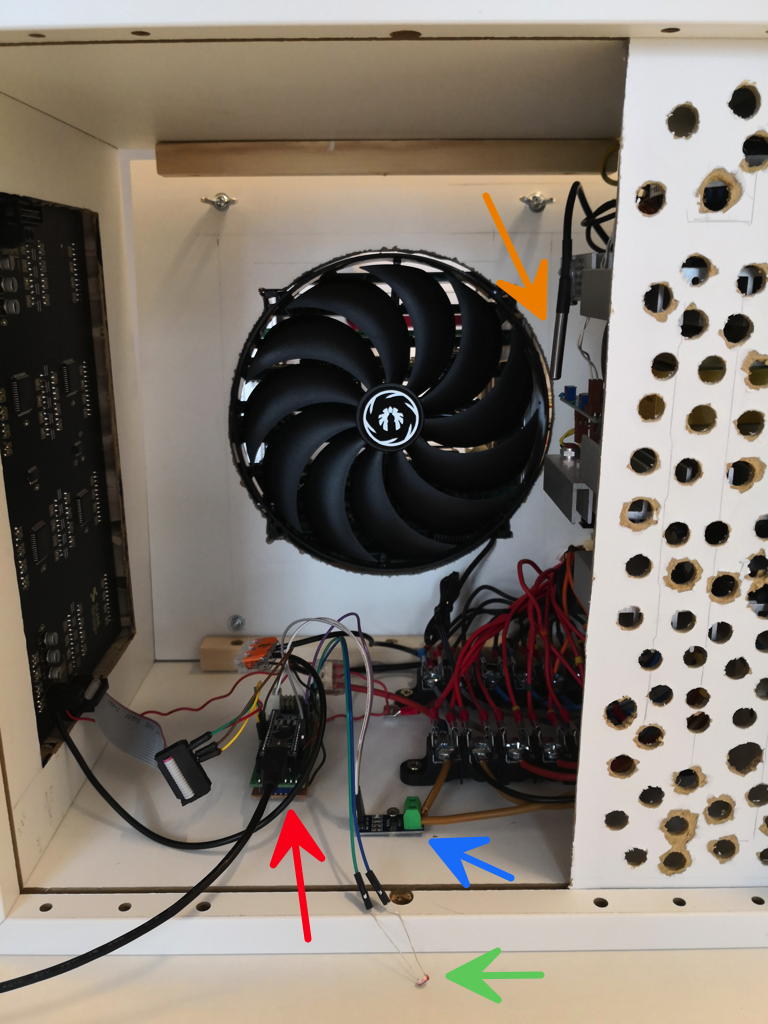

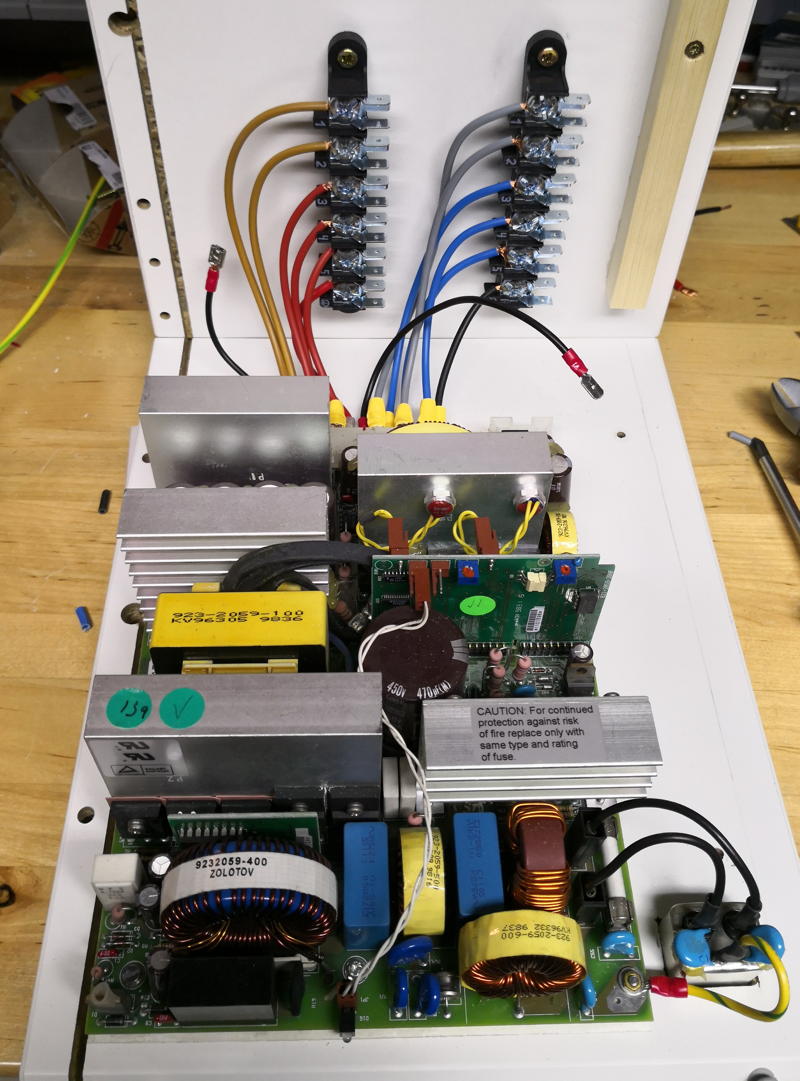

Here’s an overview of the base internals:

The red arrow points to the T2i2c being connected to the LED panel to the left as well as to a hall-sensor (blue arrow) measuring the power consumption, a temperature sensor (orange) and a photo-diode (green).

You cannot overlook the 22cm fan in the back sucking air from the bottom along the power-supply and pushing it up to the Transputers above to keep them cool.

And their power consumption is not to be trivialized. In average a single Transputer TRAM requires is about 1 ampere… so the math is easy. This means the quest for a powerful power-supply was on.

After some months I found what used to be the power-supply meant for a 3Com Corebuilder 7000: The mighty 3C37010A. A whopping 90A@5V should be OK for starters… here’s the fitting procedure:

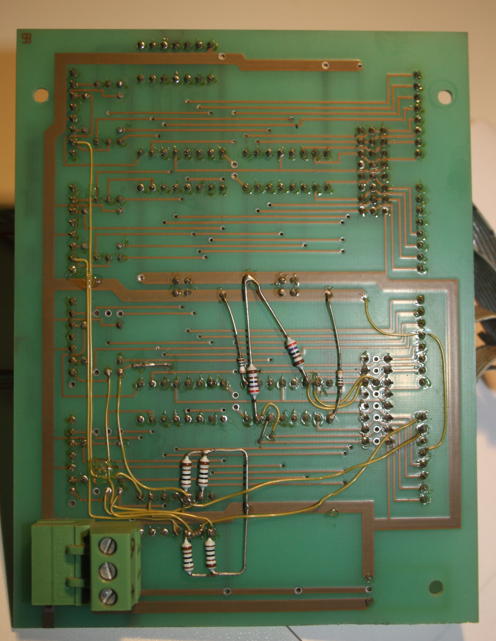

The back of the medal…

The backside did not change compared to the previous Cube back – well besides the supply-cabling which now goes down into the base instead to the side of the cage.

In consequence you’ll spot the power-connector there. No switch though – still thinking about that… as well as a nicer cable-management for the link-cable which is normally connected to the host.

Next up would be a host matching the look. Mhhhh….